ESTIMATES OF THE ACCURACY OF LAND COVER MAPPING BY THE MAPBIOMAS PROJECT

ACCESS THE COLLECTION 3.0 STATISTICS DASHBOARD

Accuracy analysis is the main way to assess the quality of the mapping produced by MapBiomas. Besides giving the overall hit rate, it also provides estimates of the hit and error rates for each mapped class. MapBiomas evaluated the overall accuracy and the accuracy of each land use and land cover class for every year from 1985 to 2024.

The accuracy estimates were based on the evaluation of a sample of pixels, which we call the reference database, consisting of ~71,500 samples. The number of pixels in the reference database was determined in advance using statistical sampling techniques. For each year, every pixel in the reference database was evaluated by technicians trained in the visual interpretation of Landsat images. Accuracy was assessed using metrics that compare the mapped class with the class assigned by the technicians in the reference database.

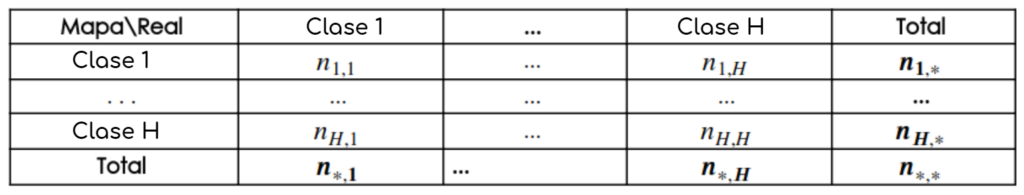

For each year, the accuracy analysis is based on the cross-tabulation of the sampled frequencies of the mapped and actual classes, in the format of Table 1. The frequencies $n_{i,j}$ represent the number of sample pixels classified as class $i$ and evaluated as class $j$. The row marginal totals $n_{i+} = \sum_j n_{i,j}$ represent the number of samples mapped as class $i$, while the column marginal totals $n_{+j} = \sum_i n_{i,j}$ represent the number of samples evaluated by the technicians as class $j$. Table 1 is called the error matrix or confusion matrix.
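The cross-tabulation described above can be sketched in a few lines of Python. This is only an illustration: the function name `error_matrix`, the class names, and the eight sample labels are hypothetical and are not MapBiomas data or code.

```python
from collections import Counter


def error_matrix(mapped, reference, classes):
    """Cross-tabulate mapped vs. reference labels.

    Rows are mapped classes (i), columns are reference classes (j),
    so cell [i][j] counts samples mapped as i and evaluated as j.
    """
    counts = Counter(zip(mapped, reference))
    return [[counts[(i, j)] for j in classes] for i in classes]


# Hypothetical labels for eight sample pixels (illustration only).
classes = ["forest", "pasture", "water"]
mapped = ["forest", "forest", "forest", "pasture",
          "pasture", "water", "water", "forest"]
reference = ["forest", "forest", "pasture", "pasture",
             "forest", "water", "water", "pasture"]

n = error_matrix(mapped, reference, classes)
row_totals = [sum(row) for row in n]        # n_{i+}: samples mapped as class i
col_totals = [sum(col) for col in zip(*n)]  # n_{+j}: samples evaluated as class j

print(n)
print(row_totals, col_totals)
```

Both sets of marginal totals add up to the sample size, which is a quick sanity check on any error matrix.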

Table 1: Generic sample error matrix

From the results in Table 1, the sample proportion in each cell of the table is estimated by $\hat{p}_{i,j} = n_{i,j} / n$, where $n$ is the total number of samples. The matrix of values $\hat{p}_{i,j}$ is then used to generate:

- User's accuracy: the estimated fraction of the mapped pixels of each class that were correctly classified. User's accuracy is associated with the commission error, which is the error made when a pixel is assigned to class $i$ although it belongs to another class. With $\hat{p}_{i+} = \sum_j \hat{p}_{i,j}$, the user's accuracy for class $i$ is estimated by $\hat{U}_i = \hat{p}_{i,i} / \hat{p}_{i+}$ and the commission error by $1 - \hat{U}_i$. These metrics are associated with the reliability of each mapped class.

- Producer's accuracy: the sample fraction of the pixels of each actual class that the classifiers correctly assigned to that class. Producer's accuracy is associated with the omission error, which occurs when a pixel of class $j$ is not assigned to its correct class. With $\hat{p}_{+j} = \sum_i \hat{p}_{i,j}$, the producer's accuracy for class $j$ is estimated by $\hat{P}_j = \hat{p}_{j,j} / \hat{p}_{+j}$ and the omission error by $1 - \hat{P}_j$. These metrics are associated with the sensitivity of the classifier, that is, its ability to correctly distinguish one particular class from the others.

- Overall accuracy: the estimate of the overall proportion of correct classifications. It is given by $\hat{O} = \sum_i \hat{p}_{i,i}$, the sum of the main diagonal of the proportion matrix. Its complement, the total error $1 - \hat{O}$, is further decomposed into area disagreement and allocation disagreement¹. Area disagreement measures the fraction of the error attributed to the amount of area incorrectly assigned to the classes by the mapping, while allocation disagreement measures the fraction attributed to displacement errors.
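The three groups of metrics above can be computed directly from an error matrix of counts. The sketch below is a minimal illustration, not the MapBiomas implementation: the function name and the input counts are hypothetical, and it assumes simple sample proportions $n_{i,j}/n$. The source does not spell out the formulas for the error decomposition, so the code assumes the common quantity/allocation formulation: area disagreement as half the sum of absolute differences between row and column marginals, and allocation disagreement as the sum of the per-class minimum of commission and omission.

```python
def accuracy_metrics(n):
    """Accuracy metrics from a square error matrix of counts.

    Rows are mapped classes (i), columns are reference classes (j).
    """
    total = sum(sum(row) for row in n)
    k = len(n)
    p = [[cell / total for cell in row] for row in n]         # p_hat[i][j]
    row = [sum(p[i]) for i in range(k)]                       # p_hat_{i+}
    col = [sum(p[i][j] for i in range(k)) for j in range(k)]  # p_hat_{+j}
    nan = float("nan")

    users = [p[i][i] / row[i] if row[i] else nan for i in range(k)]
    producers = [p[j][j] / col[j] if col[j] else nan for j in range(k)]
    overall = sum(p[i][i] for i in range(k))

    # Assumed decomposition of the total error (1 - overall); the source
    # names the two components but does not give their formulas.
    area = sum(abs(col[g] - row[g]) for g in range(k)) / 2
    allocation = sum(min(row[g] - p[g][g], col[g] - p[g][g]) for g in range(k))

    return {
        "overall": overall,
        "users": users,
        "commission": [1 - u for u in users],
        "producers": producers,
        "omission": [1 - q for q in producers],
        "area_disagreement": area,
        "allocation_disagreement": allocation,
    }


# Hypothetical 3-class error matrix (illustration only).
metrics = accuracy_metrics([[2, 2, 0], [1, 1, 0], [0, 0, 2]])
print(metrics["overall"], metrics["users"], metrics["producers"])
```

Under this formulation the two disagreement components always add up to the total error, which makes the decomposition easy to verify on any matrix.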

The matrix also provides estimates of the different types of error. For example, it shows the estimated composition of the area of each mapped class. Thus, in addition to the hit rate for the class mapped as forest, for example, we also estimate what fraction of those areas may actually be pasture or other land cover and land use classes, for each year. We believe this level of transparency informs users and maximizes the usefulness of the mapping for different types of users.

ABOUT THE CHARTS

GENERAL STATISTICS
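The composition of a mapped class corresponds to normalizing each row of the error matrix by its row total. A minimal sketch, with hypothetical class names and counts (not MapBiomas results):

```python
def mapped_class_composition(n, classes):
    """For each mapped class, the estimated share of each reference class."""
    composition = {}
    for i, mapped_class in enumerate(classes):
        row_total = sum(n[i])
        if row_total == 0:
            continue  # class absent from the mapped sample; nothing to estimate
        composition[mapped_class] = {
            ref: n[i][j] / row_total for j, ref in enumerate(classes)
        }
    return composition


# Hypothetical counts: rows = mapped class, columns = reference class.
classes = ["forest", "pasture", "water"]
n = [[90, 8, 2],
     [5, 40, 0],
     [0, 1, 24]]

composition = mapped_class_composition(n, classes)
print(composition["forest"])
```

In this made-up example, the diagonal share of the "forest" row is its user's accuracy, and the "pasture" share is the estimated fraction of mapped forest that is actually pasture.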

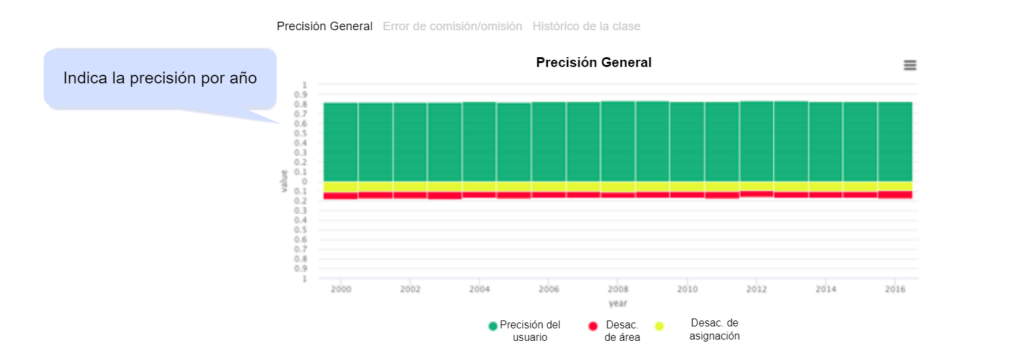

Shows the average annual total accuracy and the error decomposed into area disagreement and allocation disagreement.

- Chart 1. Total annual accuracy chart

This chart shows the total accuracy and the total error for each year. The total error is broken down into area disagreement and allocation disagreement. Accuracy is plotted in the upper part of the chart and the errors in the lower part.

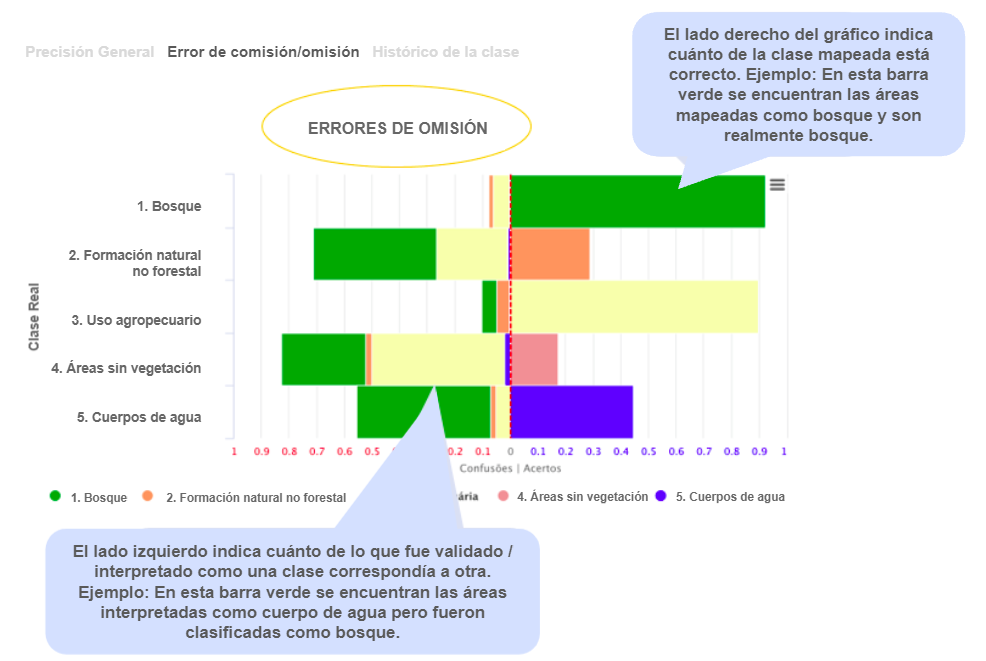

- Chart 2. Error matrix

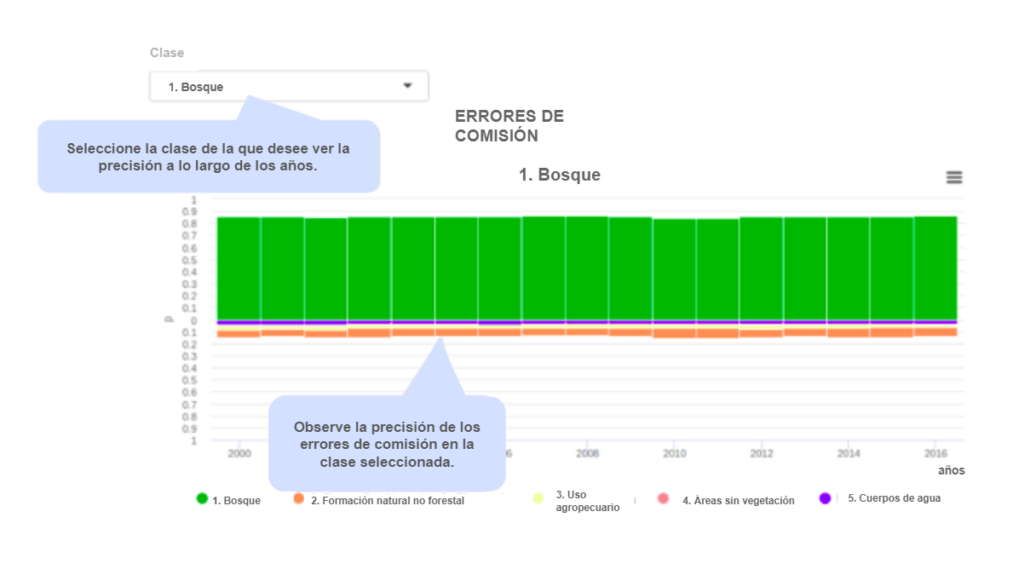

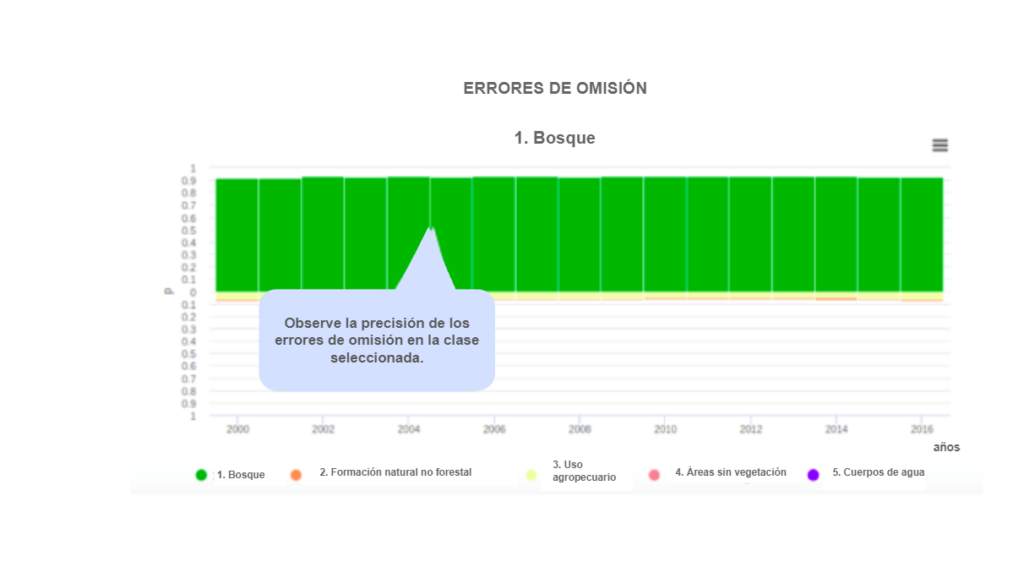

This chart shows the user's accuracy, the producer's accuracy, and the confusion between classes for each year. The first view shows the confusions for each mapped class. The second shows the confusions for each actual class.

- Chart 3. Class history

This chart lets you inspect the confusions of a particular class over time. It shows the user's and producer's accuracy for each class, together with the confusions in each year.
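The kind of per-class time series behind such a chart can be sketched as follows, assuming one error matrix of counts per year. The function name, the two-class matrices, and the years used here are hypothetical and serve only to show the calculation.

```python
def class_history(matrices_by_year, class_index):
    """User's and producer's accuracy of one class for each year.

    matrices_by_year maps a year to an error matrix of counts
    (rows = mapped class, columns = reference class).
    """
    nan = float("nan")
    history = {}
    for year in sorted(matrices_by_year):
        n = matrices_by_year[year]
        correct = n[class_index][class_index]
        mapped_total = sum(n[class_index])                    # row total
        reference_total = sum(row[class_index] for row in n)  # column total
        history[year] = {
            "user": correct / mapped_total if mapped_total else nan,
            "producer": correct / reference_total if reference_total else nan,
        }
    return history


# Hypothetical two-class matrices for two years (illustration only).
matrices_by_year = {
    1985: [[40, 10], [10, 40]],
    1986: [[45, 5], [15, 35]],
}

history = class_history(matrices_by_year, class_index=0)
for year, values in history.items():
    print(year, values)
```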